Applying Domain Adaptation: X23D on Real Data

In our follow-up study, “Domain Adaptation Strategies for 3D Reconstruction of the Lumbar Spine Using Real Fluoroscopy Data”, we addressed the domain gap between synthetic training data and real intraoperative images. The study introduced a paired dataset of synthetic and real fluoroscopic images, which enables our deep learning model to reconstruct the lumbar spine in 3D directly from real X-rays. Using transfer learning and style adaptation, the refined X23D model produces high-accuracy spinal reconstructions with a computation time of 81.1 ms, fast enough for real-time use. This narrows the gap between models trained on synthetic data and their use in intraoperative surgical navigation, and it supports improved surgical planning and robotics. Read more in the journal Medical Image Analysis.

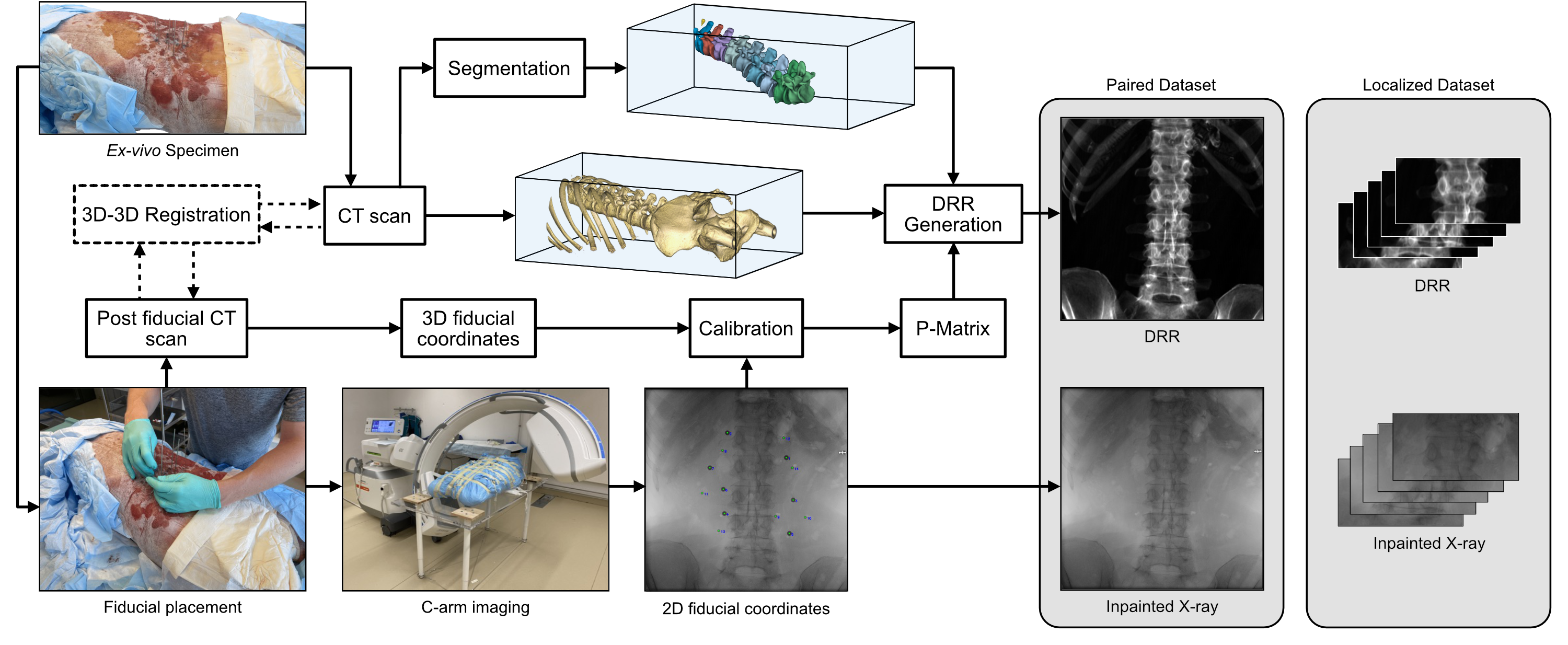

Figure 1: DRR Generation UI

Figure 2: Data Collection Pipeline

Figure 3: Results on Real and Synthetic Data